Select the Spark Python 3.6 runtime system.Įnter the following URL for the notebook:Ĭlick Create Notebook. On the New Notebook page, configure the notebook as follows:Įnter the name for the notebook (for example, ‘getting-started-with-pyspark’). Then, click Load.ĭrag the files to the drop area or browse for files to upload the data into Watson Studio.Ĭreate a Jupyter Notebook and change it to use the data set that you have uploaded to the project. If not already open, click the 1001 data icon at the upper right of the panel to open the Files sub-panel. Select the data sets: 5000_points.txt and people.csv and download the files to your local desktop. Next, you’ll download the data set and upload it to Watson Studio. If it is the only storage service that you have provisioned, it is assigned automatically. In the New project window, name the project (for example, “Getting Started with PySpark”).įor Storage, you should select the IBM Cloud Object Storage service you created in the previous step. Click either Create a project or New project.Then, click the Object Storage tile.Ĭhoose Lite plan and Click Create button.įrom your IBM Cloud account, search for “Watson Studio” in the IBM Cloud Catalog. If you do not already have a storage service provisioned, complete the following steps:įrom your IBM Cloud account, search for “object storage” in the IBM Cloud Catalog.

Create IBM Cloud Object Storage serviceĪn Object Storage service is required to create projects in Watson Studio. It should take you approximately 60 minutes to complete this tutorial. To complete the tutorial, you need an IBM Cloud account. Use Machine learning with MLlib library.Use SQL queries with DataFrames by using Spark SQL module.The data set has a corresponding Notebook. We’ll use two different data sets: 5000_points.txt and people.csv. This tutorial explains how to set up and run Jupyter Notebooks from within IBM Watson Studio.

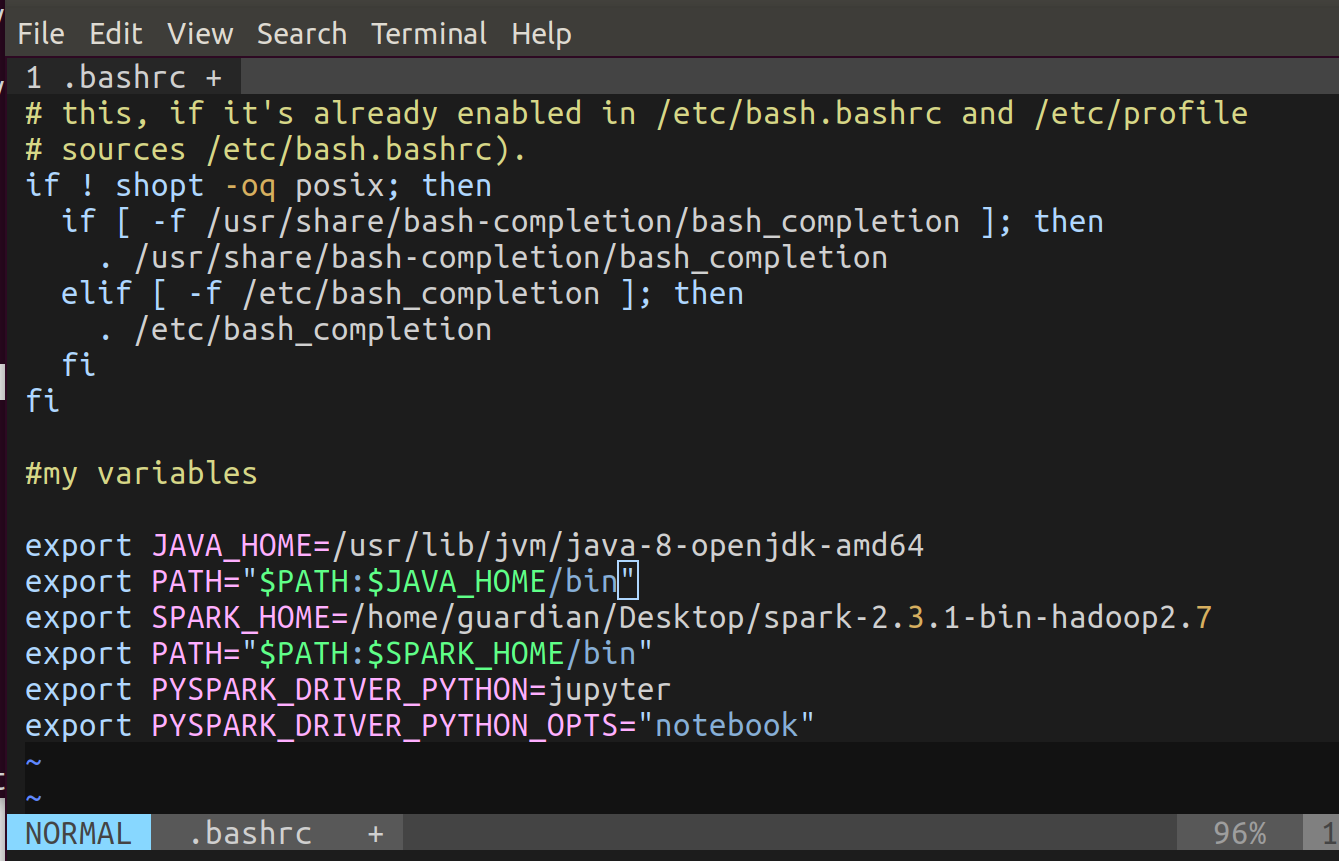

Using PySpark, you can work with RDDs in Python programming language. PySpark is a parallel and distributed engine for running big data applications. PySpark has similar computation speed and power as Scala. To support Python with Spark, the Apache Spark community released a tool, PySpark. Apache Spark is written in Scala programming language. Spark SQL is Apache Spark’s module for working with structured data and MLlib is Apache Spark’s scalable machine learning library. The main abstraction Spark provides is a resilient distributed data set (RDD), which is the fundamental and backbone data type of this engine. Apache Spark is a fast and powerful framework that provides an API to perform massive distributed processing over resilient sets of data.
